David Freedman has a new book out with the somewhat unwieldy title of WRONG: Why experts keep failing us--and how to know when not to trust them. Mr Freedman's premise is that virtually all (if not, in fact, all) of the information we receive from experts such as scientists, doctors, finance wizards and so on is wrong. To be fair, he also includes journalistic pundits, amongst whom he counts himself. This means that, if his thesis is true, his claims are most likely false and, therefore, the experts are actually correct, which means that he, as an expert, is also correct and that the experts are therefore wrong and....

EoR feels like he's in a scene from a bad SF movie where the supercomputer runs amok, only to be destroyed by the old "The next statement I make will be true. The last statement I made was false" line.

The introduction to the book is available courtesy of The New York Times. Part of the problem with the book is that Mr Freedman equivocates about the meaning of 'expert'. He doesn't actually mean an expert (someone who is knowledgeable in a particular area) but rather:

(W)hen I say “expert,” I’m mostly thinking of someone whom the mass media might quote as a credible authority on some topic — the sorts of people we’re usually referring to when we say things like “According to experts . . .” These are what I would call “mass” or “public” experts, people in a position to render opinions or findings that a large number of us might hear about and choose to take into account in making decisions that could affect our lives. Scientists are an especially important example, but I’m also interested in, for example, business, parenting, and sports experts who gain some public recognition for their experience and insight. I’ll also have some things to say about pop gurus, celebrity advice givers, and media pundits, as well as about what I call “local” experts — everyday practitioners such as non-research-oriented doctors, stockbrokers, and auto mechanics.

So, Mr Freedman is actually arguing that the statements presented in mass media are usually wrong. This is different from an argument that experts are usually wrong. Meryl Dorey, for example, is a media 'expert' (indeed, for Howard Sattler, she is the only vaccination 'expert' worthy of presenting to his radio audience) but she is not an expert in (to EoR's knowledge) any field at all.

Mr Freedman provides a list of factoids. Most of these are misleading, inaccurate or incomplete. There may well be further support for his factoids in his book (which EoR has not read), and EoR does support the need for assessing the validity of experts (or, at least, what is usually presented as expert opinion in the mass media), but these items are flimsy:

About two-thirds of the findings published in top medical journals are refuted within a few years.

This indicates a fundamental misconception of the nature of science (and this misunderstanding also affects most of the subsequent factoids). All science is provisional (even evolution) until further research is undertaken. Also, medical journals are a subset of total science research (which is itself a subset of all 'expert' advice). While Mr Freedman focuses heavily on pharmaceutical trials, much scientific research is actually theorising and argument; it's the mass media that presents it as absolute teleological Truth.

As much as 90 percent of physicians' medical knowledge has been gauged to be substantially or completely wrong.

EoR doesn't know what to make of this. Do the majority of physicians have no idea where the spleen is located? And what is the level of incorrect knowledge for homeopaths?

Economists have found that all studies published in economics journals are likely to be wrong.

Presumably, this is from a study published in an economics journal.

Nearly 100 percent of studies that find a particular type of food or a vitamin lowers the risk of disease fail to hold up.

This seems to be confusing the findings of studies with what is reported by mass media as the new superfood du jour (and which alties pick up and promote endlessly as the cure for all known diseases).

Professionally prepared tax returns are more likely to contain significant errors than self-prepared returns.

Is this because more complicated tax returns are more likely to be prepared by professionals? Is this in the US, or worldwide? What does 'more likely' mean?

In spite of $100 billion spent annually on medical research in the US, average life span here has increased by only a few years since 1978, with that small rise mostly due to a drop in smoking rates.

The US medical system is a strange beast, which may not reflect the rest of the world, but Mr Freedman has chosen to measure medical research outcomes solely against lifespan. There is no assessment of quality of life, or of specific disease rates.

There is a one out of twelve chance that a doctor's diagnosis will be wrong in a way that will cause significant harm to the patient.

Which means, presumably, that there's an eleven in twelve chance that the doctor's diagnosis is not wrong in a way that causes significant harm. Which rather negates Mr Freedman's statement that experts are almost always wrong.

Not a single NFL coach or manager was able to spot much potential in future-record-shattering quarterback Tom Brady, leaving him nearly undrafted by the league after college.

Cherry-picking a single exceptional case that was missed doesn't show that the experts are always wrong.

Genetics tests are one-tenth as accurate at predicting a person's height as guessing based on the height of the parents.

Height is a function of a number of factors, not only genetics (and genetic testing is also constantly improving). These factors are generally reflected in the parents, so an assessment based on the parents' heights is probably the better way of capturing all of them.

Most major drugs don't work on 40 to 75 percent of the population.

This is difficult to assess since it doesn't say whether those drugs are intended to be used on the general population, or only on a specific part of it where they do work. Nonetheless, how do we know that these figures are correct? Presumably, because the experts tell us (EoR's brain is hurting again here). And what about the 25 to 60 percent of the population that the drugs do work on? Isn't that a positive thing? And how, exactly, does that prove experts are always wrong?

Hearing an expert talk impairs the brain's ability to make decisions for itself, according to brain imaging studies.

Which doesn't necessarily mean that the expert is wrong.

A drug widely prescribed for years to heart-attack victims killed more Americans than did the Vietnam war.

And we know this now because the experts tell us? Or are they also wrong?

Only about one percent of the scientific studies based on fraudulent data are identified and reported.

EoR finds this really bizarre. To arrive at a figure of one per cent, the total number of fraudulent studies must be known. So why are the rest of them being ignored?

One-third of researchers admit to having committed or personally observed at least one act of research misconduct within the previous three years.

Researchers in all fields? An 'act of research misconduct' covers a wide range of misdemeanours, not all of which necessarily prove that experts are wrong.

Two-thirds of the drug-study findings that indicate a drug may cause harm are not fully reported by researchers.

This is a major concern regarding the reporting of pharmaceutical testing. It doesn't mean that experts are wrong so much as that data is being selectively presented. Such practices are unacceptable, but they are not indicative of a general expert failure syndrome.

Ninety-five percent of medical findings are never retested.

Which doesn't necessarily mean that they're wrong (as Mr Freedman seems to be implying, unless he knows something the rest of us don't).

Half of newspaper articles contain at least one factual error.

Journalists are not experts (except, possibly, in journalism). Confusing journalists with experts is what is really wrong with perceived 'expert failure', and it explains why Mr Freedman appears to have limited himself to mass media experts.

Sixty percent of newspaper articles quote someone who says the reporter didn't get the story straight.

Again, journalists are at fault here. Arguing that experts are wrong because journalists misquote or misinterpret them (or simply state what they want the story to say) confuses who made the mistake.

Three-quarters of experimental drugs found safe and effective in animal testing prove harmful or ineffective on humans.

Well, it should be obvious that humans aren't actually mice and that a drug that works in mice isn't necessarily going to work in humans. This is well known (except, possibly, to journalists and Mr Freedman). Finding out that the drugs don't work in humans isn't expert failure either; it is simply further research.

As many as 98 percent of medical researchers fail to fully disclose all potential conflicts of interest in published studies.

This is similar to the failure to publish all pharmaceutical studies (negative as well as positive). A conflict of interest, in itself, doesn't invalidate findings; it only makes them more suspect and in need of closer scrutiny.

A third of the studies published in top medical journals contain statistical errors.

Which doesn't mean the experts are wrong, but fallible (and why does Mr Freedman seem to concentrate so much on medical research to support his much wider ranging conclusions?).

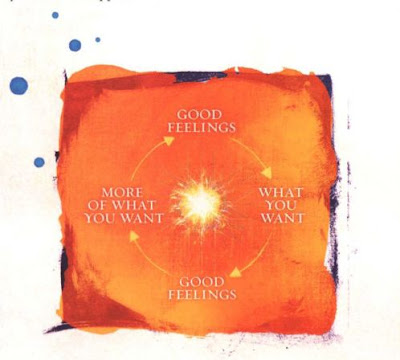

Becoming an 'expert' (in Mr Freedman's sense, though he doesn't use quote marks so the term is ambiguous) is relatively easy. Once you've been in the media (and this can be done by setting yourself up to run a seminar, release a press statement, publish a book or set up a website), the media are more likely to come back to you again (since it's easier to call on someone you already know about). This leads to a reinforcing cycle: the person is treated as an expert because they keep appearing in the media commenting on various subjects, whether they have any qualifications in that area or not.

Journalists, even when they do consult suitably qualified persons, are still driven by the urge to write a sensational story. They either fail to understand what they have been told, or change it to suit their purposes.

In both cases it is not the real experts who have been wrong; the media have fooled us. Rather than disregarding experts altogether, what we need are better tools to assess which experts we should accept, and better methods to assess what they are saying (including better training in science and critical thinking in schools).

Contemporary scientifically advanced homeopaths enabling the holistic illness journey towards spiritual enlightenment

EoR, having accidentally sat on some thistles, ponders the State of Things.